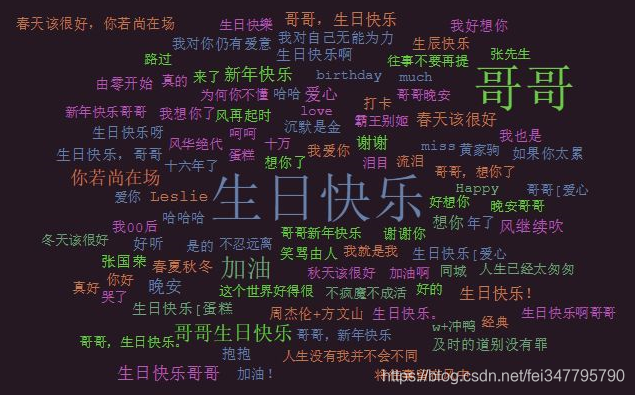

We scraped the eight songs of yours that have the most comments on NetEase Cloud Music.

In order, they are: 《沉默是金》, 《春夏秋冬》, 《倩女幽魂》, 《当爱已成往事》, 《我》, 《风继续吹》, 《玻璃之情》 and 《风再起时》.

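Across all 64,540 comments, the most frequent were "生日快乐" (happy birthday), "哥哥" (Gor Gor, "big brother", the fans' name for Leslie Cheung), "加油" (keep going), "你若尚在场" (if you were still here), "新年快乐" (happy new year) and "哥哥,生日快乐" (Gor Gor, happy birthday).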
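The article does not say how these phrases were counted. One simple approach, assuming each comment is counted as one whole string after trimming whitespace (so "哥哥" and "哥哥,生日快乐" are separate entries), is `collections.Counter`. The helper name `top_comments` and the sample strings are hypothetical and only illustrate the counting; they are not the real data.

```python
from collections import Counter


def top_comments(comments, n=6):
    """Return the n most frequent comments as (text, count) pairs.

    Hypothetical helper: each comment counts as one whole string after
    stripping surrounding whitespace; empty comments are skipped.
    """
    cleaned = (c.strip() for c in comments)
    return Counter(c for c in cleaned if c).most_common(n)


if __name__ == '__main__':
    # Toy input for illustration only, not the real 64,540 comments
    sample = ['生日快乐', '哥哥', '生日快乐 ', '加油', '哥哥', '生日快乐']
    print(top_comments(sample, n=2))  # [('生日快乐', 3), ('哥哥', 2)]
```

Counting whole comments keeps short, repeated greetings visible; splitting comments into words (for example with a Chinese tokenizer) would instead surface individual terms.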

Let's start with the code that scrapes the comments.

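```python
import json
import random
import time

import requests
from fake_useragent import UserAgent

ua = UserAgent()

# Song ID -> song title on NetEase Cloud Music
song_list = [{'186453': '春夏秋冬'}, {'188204': '沉默是金'}, {'188175': '倩女幽魂'},
             {'188489': '风继续吹'}, {'187374': '我'}, {'186760': '风再起时'}]

headers = {
    'Origin': 'https://music.163.com',
    'Referer': 'https://music.163.com/song?id=26620756',
    'Host': 'music.163.com',
    'User-Agent': ua.random,
}

# Proxy pool: the original leaves this as a placeholder ([IP列表], "IP list").
# Fill in your own proxy URLs, e.g. 'http://host:port'.
ip_list = []


def get_random_ip(ip_list):
    """Pick a random proxy; return None (no proxy) if the list is empty.

    Assumed implementation: the original calls this helper but does not show it.
    """
    if not ip_list:
        return None
    proxy = random.choice(ip_list)
    return {'http': proxy, 'https': proxy}


def get_comments(page, ite):
    """Fetch one page (20 comments) for the song in ite, a {song_id: song_name} dict."""
    song_id, song_name = next(iter(ite.items()))
    # offset counts comments, not pages, so page n starts at comment n * 20
    url = ('http://music.163.com/api/v1/resource/comments/R_SO_4_'
           + song_id + '?limit=20&offset=' + str(page * 20))
    proxies = get_random_ip(ip_list)
    try:
        response = requests.get(url=url, headers=headers, proxies=proxies, timeout=10)
        result = json.loads(response.text)
    except Exception:
        # Report which page/song failed and skip it
        print(page, ite)
        return []

    rows = []
    for item in result.get('comments', []):
        # Nickname; ASCII commas become full-width ones so the row stays CSV-safe
        user_name = item['user']['nickname'].replace(',', ',')
        # User ID
        user_id = str(item['user']['userId'])
        # Comment text, flattened to a single line
        comment = item['content'].strip().replace('\n', '').replace(',', ',')
        # Comment ID
        comment_id = str(item['commentId'])
        # Like count
        praise = str(item['likedCount'])
        # 'time' is a millisecond timestamp; convert to local time
        date = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(item['time'] // 1000))
        rows.append((song_name, user_name, user_id, comment, comment_id, praise, date))
    return rows
```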
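The comment API above is paged with two query parameters: `limit=20` returns up to 20 comments per request, and `offset` is the number of comments to skip. Covering a whole song therefore takes ceil(total / 20) requests. The sketch below builds every page URL for one song, assuming you already know its comment count `total` (for instance from an earlier response); `comment_page_urls` and `BASE` are hypothetical names.

```python
import math

# Endpoint taken from the scraper above
BASE = 'http://music.163.com/api/v1/resource/comments/R_SO_4_'


def comment_page_urls(song_id, total, limit=20):
    """Build one URL per page of comments for a song.

    Hypothetical helper: total is the song's comment count, assumed known
    in advance. Page i skips the first i * limit comments via offset.
    """
    pages = math.ceil(total / limit)
    return ['{}{}?limit={}&offset={}'.format(BASE, song_id, limit, i * limit)
            for i in range(pages)]


if __name__ == '__main__':
    # 45 comments -> 3 pages with offsets 0, 20, 40
    for u in comment_page_urls('186453', 45):
        print(u)
```

Because the pages are independent of each other, they could also be fetched in parallel, for example with a `multiprocessing.Pool`.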

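Next, the lyrics scraper we used for the eight songs. It takes a singer ID, collects the songs listed on that singer's page and saves each song's lyrics to `./results`: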

```python
import json
import os
import random
import re
import time

import requests
from bs4 import BeautifulSoup

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3355.4 Safari/537.36',
    'Referer': 'http://music.163.com',
    'Host': 'music.163.com',
}


# Fetch the page source; return None on any network error
def GetHtml(url):
    try:
        res = requests.get(url=url, headers=headers, timeout=10)
    except requests.RequestException:
        return None
    return res.text


# Collect song IDs and titles from the singer's page
def GetSongsInfo(url):
    print('[INFO]:Getting Songs Info...')
    Info = {'ID': [], 'NAME': []}
    html = GetHtml(url)
    if html is None:
        print('[Warning]:GetSongsInfo could not fetch the page...')
        return Info
    soup = BeautifulSoup(html, 'lxml')
    # Songs are listed as <a href="/song?id=..."> links inside <ul class="f-hide">
    ul = soup.find('ul', class_='f-hide')
    links = ul.find_all('a') if ul else []
    if len(links) < 1:
        print('[Warning]:GetSongsInfo <links> not found...')
    for link in links:
        Info['ID'].append(link.get('href').split('=')[-1])
        Info['NAME'].append(link.get_text())
    return Info


# Fetch one song's lyrics and strip the LRC tags such as [00:12.34]
def GetLyrics(SongID):
    print('[INFO]:Getting %s lyric...' % SongID)
    ApiUrl = 'http://music.163.com/api/song/lyric?id={}&lv=1&kv=1&tv=-1'.format(SongID)
    html = GetHtml(ApiUrl)
    if html is None:
        return ''
    # Songs without lyrics may lack the 'lrc' field
    temp = json.loads(html).get('lrc', {}).get('lyric', '')
    # [^\]]* stops at the first ']', so text between two tags is not swallowed
    rule = re.compile(r'\[[^\]]*\]')
    lyric = rule.sub('', temp).strip()
    print(lyric)
    return lyric


# Save lyrics to ./results/<song name>.txt
def SaveLyrics(SongName, lyric):
    print('[INFO]: Start to Save {}...'.format(SongName))
    os.makedirs('./results', exist_ok=True)
    # Characters such as '/' in a title would break the file path
    safe_name = re.sub(r'[\\/:*?"<>|]', '_', SongName)
    with open('./results/{}.txt'.format(safe_name), 'w', encoding='utf-8') as f:
        f.write(lyric)


def main():
    SingerId = input('Enter the Singer ID:')
    url = 'http://music.163.com/artist?id={}'.format(SingerId)
    # url = "http://music.163.com/artist?id=6457"
    Info = GetSongsInfo(url)
    for ID, name in zip(Info['ID'], Info['NAME']):
        SaveLyrics(name, GetLyrics(ID))
        # Random pause of up to 3 seconds between requests
        time.sleep(random.random() * 3)
    print('[INFO]:All Done...')


if __name__ == '__main__':
    main()
```
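`GetLyrics` relies on the LRC format, where each line starts with one or more bracketed tags such as `[00:12.34]`, and metadata lines look like `[ti:...]`. The sketch below, using a hypothetical `strip_lrc_tags` helper and a made-up sample, compares a greedy `\[.*\]` pattern with the bounded `\[[^\]]*\]`: because `.` does not cross newlines, the greedy version runs from the first `[` to the last `]` on a line and eats any lyric text in between. Unlike `GetLyrics`, this helper also drops lines that end up empty.

```python
import re

# Greedy: matches from the first '[' to the LAST ']' on a line
GREEDY = re.compile(r'\[.*\]')
# Bounded: each tag ends at its own first ']'
TAG = re.compile(r'\[[^\]]*\]')


def strip_lrc_tags(lrc, pattern=TAG):
    """Remove bracketed LRC tags line by line and drop lines left empty."""
    lines = (pattern.sub('', line).strip() for line in lrc.splitlines())
    return '\n'.join(line for line in lines if line)


if __name__ == '__main__':
    # Made-up LRC sample with a metadata tag, stacked time tags and a '[x]' in the lyric
    sample = '[ti:demo]\n[00:01.00][00:30.00]first line\n[00:05.00]second [x] line'
    print(repr(strip_lrc_tags(sample)))          # 'first line\nsecond  line'
    print(repr(strip_lrc_tags(sample, GREEDY)))  # 'first line\nline'
```

Both patterns handle stacked tags like `[00:01.00][00:30.00]` correctly; they only differ when a bracket appears inside the lyric text itself.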